Following Directions

Consider the below exchange from Twitter:

In Zuegel’s thought experiment, we want to use a simulated model economy to evaluate the actions of central bankers. But you could also imagine wanting to test the accuracy of the model by seeing how it responds to the actions of the central bankers (e.g., a model in which a central banker raising interest rates, ceteris paribus, increased consumption might make us doubt the model rather than use that outcome to evaluate the central banker’s competence). Do we measure the central banker’s competence by how they perform in the simulated world, or do we measure the fidelity of the simulated world by how well it responds to the actions of the central banker? Which way does the direction of authority flow? From simulation to user or from user to simulation?

G.E.M. Anscombe anticipated this question in her 1957 work Intention:

Let us consider a man going round a town with a shopping list in his hand. Now it is clear that the relation of this list to the things he actually buys is one and the same whether his wife gave him the list or it is his own list; and that there is a different relation when a list is made by a detective following him about. If he made the list itself, it was an expression of an intention; if his wife gave it him, it has the role of an order. What then is the identical relation to what happens in the order and the intention, which is not shared by the record? It is precisely this: if the list and the things that the man actually buys do not agree, and if this and this alone constitutes a mistake, then the mistake is not in the list but in the man’s performance (if his wife were to say: ‘Look, it says butter and you have bought margarine,’ he would hardly reply: ‘What a mistake! we must put that right’ and alter the word on the list to ‘margarine’); whereas if the detective’s record and what the man actually buys do not agree, then the mistake is in the record.

Do we judge the man’s actions by the items on the list or the accuracy of the list by the man’s actions? When our ruler says that our supposedly 8.5 x 11 inch piece of paper is 8.5 inches by 13 inches, do we complain to the paper manufacturer or the ruler manufacturer? When your iPhone fails to recognize your face, do you tweak the facial recognition algorithm or turn the phone over to a lost and found? There’s no objective singular fact-of-the-matter of how to use the list; rather, its proper usage will depend on our disposition towards it. The detective uses the list to record the husband’s actions; the wife uses it to command the husband on how to act. When an AI makes a judgement, we can either use that to judge the competence of the AI or we can take it as a guide for how to act.

One possible way around this apparent ambiguity would be to program our AIs in such a way that we never need to wonder if they are competently making judgements. If we knew a priori that an AI’s judgements would necessarily reflect how a competent human would judge some situation, then we would always analyze the AI’s judgements in the same direction: “the AI told us to do X, so we should do X” and never “we shouldn’t do X, but the AI said to do X, so we should revise the AI”. That’d be cool! It’s not great to have computers making important decisions and having to wonder whether their judgements reflect an incompetent understanding of how to apply rules to the relevant situation! But, our ambiguity is unavoidable. It is impossible to make an algorithm that we know will competently recognize your face in every instance: inevitably there will be cases where a human’s judgement diverges from that of a computer in which we must either override the computer’s judgement (if we can) or we must surrender ourselves to a wanton misapplication of a rule.

Saul Kripke anticipated the problem of having a “machine” perfectly represent one’s intentions[1] in his 1982 work Wittgenstein on Rules and Private Language:

The term 'machine' is here, as often elsewhere in philosophy, ambiguous. Few of us are in a position to build a machine or draw up a program to embody our intentions; and if a technician performs the task for me, the sceptic can ask legitimately whether the technician has performed his task correctly. Suppose, however, that I am fortunate enough to be such an expert that I have the technical facility required to embody my own intentions in a computing machine, and I state that the machine is definitive of my own intentions. Now the word 'machine' here may refer to any one of various things. It may refer to a machine program that I draw up, embodying my intentions as to the operation of the machine. Then exactly the same problems arise for the program as for the original symbol '+': the sceptic can feign to believe that the program, too, ought to be interpreted in a quus-like manner. To say that a program is not something that I wrote down on paper, but an abstract mathematical object, gets us no further. The problem then simply takes the form of the question: what program (in the sense of abstract mathematical object) corresponds to the 'program' I have written on paper (in accordance with the way I meant it)? ('Machine' often seems to mean a program in one of these senses: a Turing 'machine', for example, would be better called a 'Turing program'.) Finally, however, I may build a concrete machine, made of metal and gears (or transistors and wires), and declare that it embodies '+': the values that it gives are the values of the function I intend. However, there are several problems with this. First, even if I say that the machine embodies the function in this sense, I must do so in terms of instructions (machine 'language', coding devices) that tell me how to interpret the machine; further, I must declare explicitly that the function always takes values as given, in accordance with the chosen code, by the machine.
But then the sceptic is free to interpret all these instructions in a non-standard 'quus'-like way. Waiving this problem, there are two others - here is where the previous discussion of the dispositional view comes in. I cannot really insist that the values of the function are given by the machine. First, the machine is a finite object, accepting only finitely many numbers as input and yielding only finitely many as output - others are simply too big. Indefinitely many programs extend the actual finite behavior of the machine. Usually this is ignored because the designer of the machine intended it to fulfill just one program, but in the present context such an approach to the intentions of the designer simply gives the sceptic his wedge to interpret in a non-standard way. (Indeed, the appeal to the designer's program makes the physical machine superfluous; only the program is really relevant. The machine as physical object is of value only if the intended function can somehow be read off from the physical object alone.) Second, in practice it hardly is likely that I really intend to entrust the values of a function to the operation of a physical machine, even for that finite portion of the function for which the machine can operate. Actual machines can malfunction: through melting wires or slipping gears they may give the wrong answer. How is it determined when a malfunction occurs? By reference to the program of the machine, as intended by its designer, not simply by reference to the machine itself. Depending on the intent of the designer, any particular phenomenon may or may not count as a machine 'malfunction'. A programmer with suitable intentions might even have intended to make use of the fact that wires melt or gears slip, so that a machine that is 'malfunctioning' for me is behaving perfectly for him.
Whether a machine ever malfunctions and, if so, when, is not a property of the machine itself as a physical object but is well defined only in terms of its program, as stipulated by its designer. Given the program, once again the physical object is superfluous for the purpose of determining what function is meant. Then, as before, the sceptic can concentrate his objections on the program. The last two criticisms of the use of the physical machine as a way out of scepticism - its finitude and the possibility of malfunction - obviously parallel two corresponding objections to the dispositional account.
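
To make the sceptic’s worry a little more concrete, here is a minimal Python sketch. It assumes Kripke’s own definition of quus from earlier in the same book (x quus y equals x + y when both arguments are below 57, and 5 otherwise); the names `plus`, `quus`, and `observed` are mine and purely illustrative. The point is only that two different programs can agree on every case we have actually checked and still diverge on the next one.

```python
def plus(x: int, y: int) -> int:
    """Ordinary addition."""
    return x + y


def quus(x: int, y: int) -> int:
    """Kripke's deviant function: addition below 57, otherwise 5."""
    return x + y if x < 57 and y < 57 else 5


# Every computation performed "so far": both arguments below 57.
observed = [(x, y) for x in range(57) for y in range(57)]

# On the observed cases the two functions are indistinguishable.
agree_on_observed = all(plus(x, y) == quus(x, y) for x, y in observed)
print(agree_on_observed)           # True

# On a new case they come apart.
print(plus(68, 57), quus(68, 57))  # 125 5
```

No finite record of past outputs picks out `plus` over `quus`, and, as Kripke notes, pointing at the source code only moves the question to how that code is meant to be interpreted.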

This line of thinking seems to suggest some problems for AI alignment researchers. If we can never perfectly represent a human intention in a computer program, how can we ensure 100% alignment between human values and the goals of computers? That’s a pessimistic spin on this. A perhaps more optimistic one is to note that the entire field of Computer Science only came about in the wake of two seemingly disappointing results in mathematics.

Kurt Gödel proved that no consistent set of axioms that can be effectively written down suffices to prove every truth of arithmetic, and Alan Turing showed that there is no algorithm for determining whether or not an arbitrary mathematical statement is provable. In dashing the hopes of many early 20th century mathematicians, Gödel and Turing developed the tools of Computer Science and kicked off the Information Age. Where mathematical logic had Gödel’s incompleteness theorems and Turing’s negative solution to the decision problem, analytic philosophy offers a kind of impossibility theorem for the prospect of perfectly representing human intentions in a computer. In exploring this impossibility theorem, maybe we’ll sufficiently sharpen our tools so as to birth entirely new ways of thinking about AI and the mind.

Here’s a moonshot hypothesis about where this could go. Neuralink aspires to be able to transmit human thoughts and intentions telepathically. As we carefully elucidate the key concepts of the mind as Turing did for computation, maybe we’ll unlock technology like what Neuralink aspires to build, and more.

High and Low Culture

The commingling of high and low culture is supposed to be a defining feature of postmodernism and therefore somehow unique to our current cultural situation. This seems exactly wrong though - many of the best works of Western civilization borrow from both the high and low culture of their time. It actually seems like a good formula for creating something new and great. If you’re only working within the constraints of an already accepted high culture, there is little room for innovation. But to meld the high culture with a folk culture or low culture requires a mastery of both and some innovative spirit, which can plausibly push whatever medium you’re working in forward. Knowing very little about music, my impression is that Mozart’s “The Magic Flute” (a Mozart collaboration with a vaudeville producer) and Stravinsky’s “The Rite of Spring” (which scandalously incorporates Russian folk music) are both highly regarded works of art and fit this profile. As we discussed in the last newsletter, Eliot’s Middlemarch, usually considered one of the best books ever written, is a prose variation on the epic that is focused on seemingly un-epic characters and so is probably an example as well. Dante’s Divine Comedy is famously written in vernacular Italian instead of Latin. Even Shakespeare’s plays, today’s highest of highbrow content, were, as English teachers love to cite, broadly popular across the various social classes. Far from being embarrassed about using elements of contemporary “low” culture, we should probably expect artists to do so and celebrate those who successfully keep art (and the insights of contemporary “high” culture) relevant and interesting.

Moral Alignment

In Dungeons and Dragons, characters can be categorized by their alignment along two axes: lawfulness and morality.

I was listening to the latest Tyler Cowen podcast, in which he interviews Ruth Scurr, who recently wrote a biography of Napoleon and previously wrote biographies of Robespierre and John Aubrey. About halfway through the interview, they have the below exchange:

COWEN: By the time you get to the end of your John Aubrey book, which is called My Own Life, titled by you, you describe Aubrey as, and I quote, “a wonderful friend.” Could you say the same about Napoleon?

SCURR: Absolutely not. And by the way, that description of Aubrey is picking up on my first book with Robespierre where, at the very beginning of the book, I say that he has friends and enemies even to this day. Like Napoleon, he’s a very, very divisive figure. And that I had tried to be his friend — to see things from his point of view — but that friends, as he always suspected, have opportunities for betrayal that enemies only dream of.

Actually, my editor was quite nervous about — this was my first book, and she was very nervous about me putting that sentence into my introduction because she thought people might just think this was mad, to try and be Robespierre’s friend. And I insisted. It really mattered to me that if you give someone the benefit of the doubt, if you don’t start off vilifying them — even if they have been responsible for the Terror — then the portrait that you construct at the end of the day is going to be far, far more damning because you’ve included the good. You’ve included the things that there are to approve of; you’ve included those sides, and you haven’t started off basically telling people, “We’re going to write a book from this particular position.”

The literal impossibility of befriending someone who’s been dead for over 200 years aside, it’s interesting that Scurr tries to offer the most sympathetic perspective on Robespierre highlighting the good in him as a way of making his eventual evil in the Reign of Terror all the more damning. Is Scurr right that we judge the evil people do more harshly when we are also aware of the good they were capable of? Surely part of what makes the Harvey Dent/Two-Face story arc in The Dark Knight work is the focus on his incredible moral courage before his transformation into a villain. But, then again, the Joker in The Dark Knight is quite effectively portrayed as terrifyingly evil though his evil is presented without explanation or redemption. Do we reproach (fear? despise? what is the appropriate disposition to evil?) people we know to have good in them who have fallen more than those who simply seem to lack the capacity for compassion?

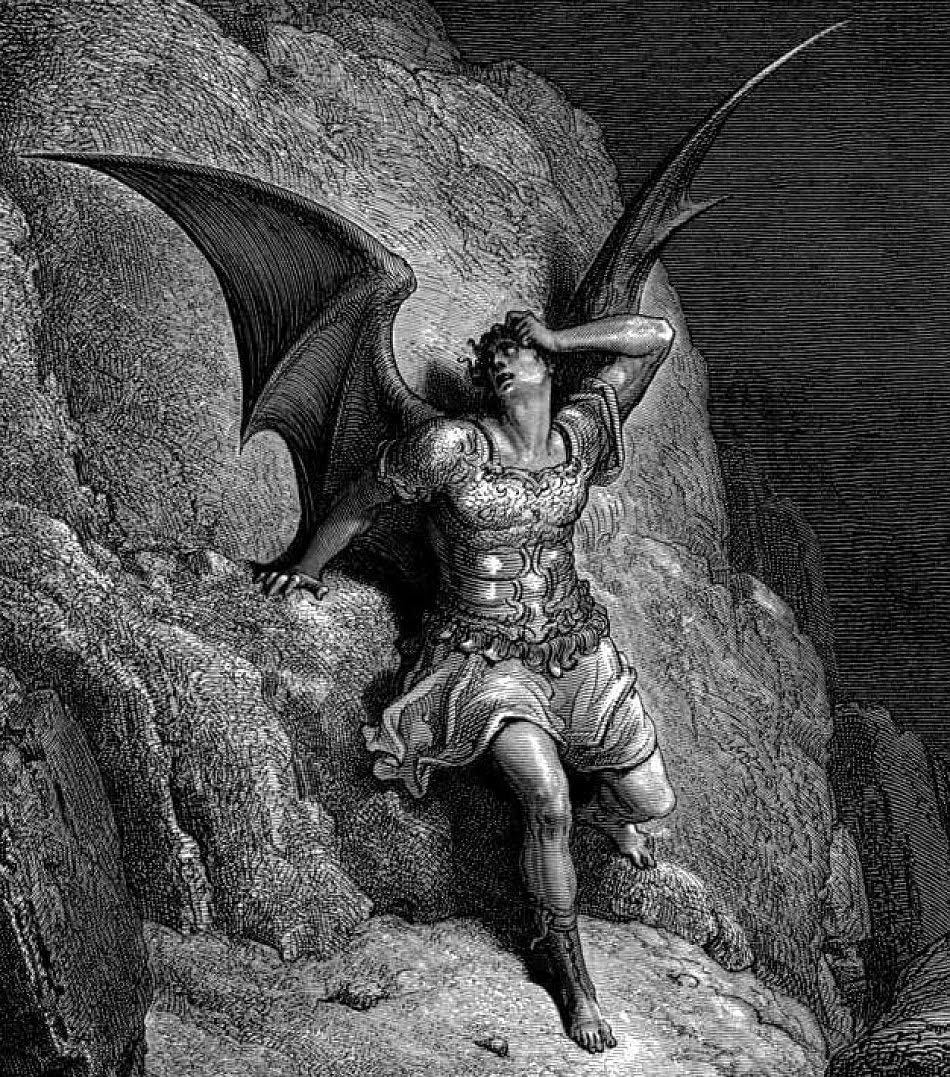

There are also different aims in evil that we may judge differently. There’s evil done in the service of an ideal, perhaps generously labeled a kind of noble confusion, and then there are some who just want to watch the world burn. Milton’s Satan doesn’t have a perverted notion of what is good; rather he explicitly chooses evil for its own sake, declaring “Evil, be thou my good”[2].

D&D and my thoughts aside, people have been thinking about the types of evil for a long time. In The Republic, Plato describes five possible kinds of souls ranging from the aristocratic to the tyrannical, representing successive decline from virtue to degeneracy and vice. Today, I guess we don’t really use the word “evil”, but psychology gives us Big Five personality traits and the “dark triad”.

Scurr also mentions Napoleon in the quote above. It’s probably wrong to think of Napoleon as evil like Robespierre. Napoleon is most often, and I think accurately, thought of primarily as a “Great Person”. Greatness, of course, is orthogonal to good and evil: the significance of one’s acts has no moral valence in itself. Where Napoleon represents the gap between greatness and goodness, I think Ulysses S. Grant is a prime example of the possibility of their union.

Incidentally, when Grant was asked which historical figures he detested the most, he apparently offered Robespierre and Napoleon[3].

If you have thoughts on any of the above, I’d love to discuss. Just reply to the newsletter email!

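[1] Here, specifically, the question is how to distinguish the plus function from a similar function, “quus”, which agrees with plus on every pair of numbers computed so far (in Kripke’s example, every pair in which both numbers are below 57) but returns 5 everywhere else.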

[2] Or is Satan’s valuing of evil a kind of idealism? Maybe the opposite of the idealistic villain is the pragmatic egoist? Or is egoism, in the spirit of Ayn Rand, an ideal itself?

[3] Anecdote from page 882 of Ron Chernow’s Grant.